Our R&D team prototypes advanced algorithms and builds them into our products to give our customers the best technology available.

Visit this page monthly for the latest R&D updates and results.

At night, vehicle headlights illuminate the scene, and traditional video analytics solutions may detect the lit area as a standalone object or as a large extension of the vehicle. Even when the vehicle itself is not visible to the camera, the headlight beam of a passing car may trigger false alarms. intuVision VA provides an intelligent headlight glare removal option that mitigates these issues.

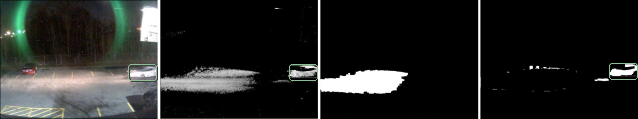

The figure above demonstrates the new technique. The vehicle is correctly detected as a foreground object, while the pixels illuminated by the headlights are removed from the final processed image.

From left to right: 1) A vehicle with its headlights on enters the camera view. Only the vehicle is detected, a result of the glare removal technique. 2) Both the pixels illuminated by the headlight beam and the vehicle pixels receive a high probability of belonging to the foreground. 3) The detected headlight beam pixels are filtered out. 4) The final processed image, free of headlight beam false positives. The vehicle is correctly detected (indicated by the green bounding box).
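
This page does not say how beam pixels are identified in step 3. As one hedged illustration, assume that a pixel lit by a headlight beam looks like a brighter copy of its background color (same chromaticity, higher intensity), while a vehicle pixel changes color as well. The sketch below applies that brightness/chromaticity test to a boolean foreground mask. The function names and the thresholds `min_gain` and `max_distortion` are hypothetical, and this is not intuVision VA's actual algorithm.

```python
"""Sketch: removing headlight-beam pixels from a foreground mask.

Assumption (illustrative, not intuVision's method): a beam pixel is roughly the
background RGB vector scaled by a factor > 1, while vehicle pixels also differ
in chromaticity. Thresholds are hypothetical.
"""
import math


def brightness_ratio(observed, background):
    """Least-squares scale factor a such that observed ~= a * background."""
    num = sum(o * b for o, b in zip(observed, background))
    den = sum(b * b for b in background)
    return num / den if den > 0 else 0.0


def chroma_distortion(observed, background):
    """Distance from observed to the scaled background, relative to |background|."""
    a = brightness_ratio(observed, background)
    residual = math.sqrt(sum((o - a * b) ** 2 for o, b in zip(observed, background)))
    norm = math.sqrt(sum(b * b for b in background))
    return residual / norm if norm > 0 else float("inf")


def is_headlight_beam(observed, background, min_gain=1.3, max_distortion=0.1):
    """True if the pixel looks like background lit by extra light of the same color."""
    return (brightness_ratio(observed, background) >= min_gain
            and chroma_distortion(observed, background) <= max_distortion)


def filter_foreground(mask, frame, background):
    """Clear beam pixels from a foreground mask; all inputs are lists of rows."""
    return [
        [fg and not is_headlight_beam(px, bg) for fg, px, bg in zip(mrow, frow, brow)]
        for mrow, frow, brow in zip(mask, frame, background)
    ]


road = (60, 60, 65)
frame = [[(120, 120, 130), (200, 30, 30), road]]  # lit road, red car, unlit road
mask = [[True, True, False]]                       # raw foreground detections
print(filter_foreground(mask, frame, [[road] * 3]))  # [[False, True, False]]
```

Here the lit road pixel is exactly twice the road color, so it is treated as beam light and dropped, while the red car pixel is kept because its color differs from the road, not just its brightness.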

Traditional background subtraction methods make use of the RGB color space to model the scene background. This approach has an inherent limitation related to handling illumination changes, as the RGB channels are affected by illumination. As the scene gets brighter, the RGB values increase in unison, and vice versa. Thus, as clouds pass over a scene, the scene darkens and the RGB model detects a change, resulting in false positive detections.
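
To make the limitation concrete, here is a minimal sketch of a per-pixel RGB background-difference test. It assumes one background color per pixel, a Euclidean RGB distance with a hypothetical threshold of 30, and an exponential running-average update; these choices are illustrative, not a description of any particular product.

```python
"""Sketch: a per-pixel RGB background-difference test under a passing cloud.

Assumptions for illustration only: one background color per pixel, Euclidean
RGB distance, a hypothetical threshold of 30, and a running-average update.
"""
import math


def is_foreground_rgb(pixel, background, threshold=30.0):
    """Flag a pixel whose RGB vector is far from the background model."""
    return math.dist(pixel, background) > threshold


def update_background(background, pixel, alpha=0.05):
    """Exponential running average, one common way to adapt an RGB model."""
    return tuple((1 - alpha) * b + alpha * p for b, p in zip(background, pixel))


background = (120.0, 140.0, 100.0)              # sunlit grass
shaded = tuple(0.6 * c for c in background)     # same grass under cloud cover

print(is_foreground_rgb(shaded, background))    # True: a false positive

# Count how many frames the adaptive model keeps flagging the shaded grass.
frames, model = 0, background
while is_foreground_rgb(shaded, model):
    model = update_background(model, shaded)
    frames += 1
print(frames)
```

Because all three channels drop together, the shaded grass sits about 84 units away from the model in RGB, and with these assumed parameters the running average keeps reporting it as foreground for 21 consecutive frames before it catches up.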

In addition to an RGB background model, we also support a model based on the CIE L*a*b* color space. This color space approximates the human visual system: the a* and b* channels capture color information along opponent color axes (as the human eye does), while L* captures lightness, similar to human perception. Because illumination and color information are decorrelated, we can detect changes in illumination and control the response of the background model accordingly.
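
A minimal sketch of the idea follows, assuming sRGB input with a D65 white point, one background color per pixel, and two hypothetical thresholds: one on the a*b* (color) change and one on the L* (lightness) change. It converts pixels to L*a*b* with the standard formulas and labels a change as "illumination" when lightness moves but color does not. The function names and threshold values are illustrative, not intuVision VA's implementation.

```python
"""Sketch: separating illumination changes from real foreground in CIE L*a*b*.

Assumptions (illustrative only): sRGB input, D65 white point, one background
color per pixel, and hypothetical chroma and lightness thresholds.
"""
import math

XN, YN, ZN = 0.95047, 1.0, 1.08883  # D65 reference white for normalizing XYZ


def srgb_to_linear(c):
    """Undo the sRGB transfer curve for one channel given in [0, 255]."""
    c /= 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c):
    """Re-apply the sRGB transfer curve; returns a value in [0, 255]."""
    c = 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055
    return 255.0 * c


def srgb_to_lab(pixel):
    """Convert an sRGB pixel to (L*, a*, b*) via linear RGB and CIE XYZ."""
    r, g, b = (srgb_to_linear(c) for c in pixel)
    x = 0.4124 * r + 0.3576 * g + 0.1805 * b
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    z = 0.0193 * r + 0.1192 * g + 0.9505 * b

    def f(t):
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = f(x / XN), f(y / YN), f(z / ZN)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def dim(pixel, factor):
    """Simulate less light: scale linear-light RGB, then re-encode as sRGB."""
    return tuple(linear_to_srgb(factor * srgb_to_linear(c)) for c in pixel)


def classify(pixel, background, chroma_threshold=10.0, lightness_threshold=10.0):
    """Return 'foreground', 'illumination', or 'background' for one pixel."""
    l1, a1, b1 = srgb_to_lab(pixel)
    l0, a0, b0 = srgb_to_lab(background)
    if math.hypot(a1 - a0, b1 - b0) > chroma_threshold:
        return "foreground"      # the color itself changed
    if abs(l1 - l0) > lightness_threshold:
        return "illumination"    # only lightness changed, e.g. a passing cloud
    return "background"


grass = (120, 140, 100)
print(classify(dim(grass, 0.5), grass))   # illumination
print(classify((200, 30, 30), grass))     # foreground
```

Note that a* and b* are not perfectly invariant to brightness: when the light level scales by a factor k, both shrink by roughly the cube root of k, so the chroma threshold must tolerate some change. The sketch also cannot tell a gray object on a gray background from a shadow, since only L* differs.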

The image below demonstrates how the model handles illumination changes. The left image shows the response of the CIE L*a*b* model in bright sunlight, while the right image shows the response when cloud cover darkens the scene. The background model handles these significant illumination changes without creating false positive detections, even in the lower-left corner of the image, where the illumination change is greatest.

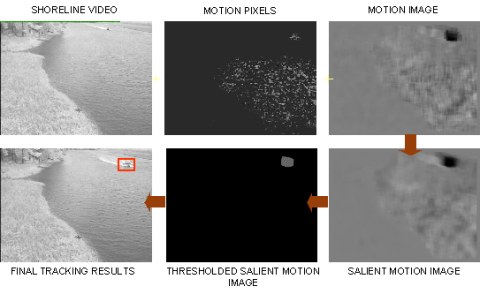

intuVision VA video analytics technology uses advanced algorithms to distinguish the motion of foreground objects of interest from unwanted motion caused by dynamic backgrounds. These algorithms allow intuVision VA to detect a boat while ignoring the rippling of the water. When observed over multiple frames, motion due to dynamic backgrounds is mostly inconsistent or repetitive. Foreground objects, on the other hand, tend to move consistently and therefore produce highly salient motion. We use this observation to detect foreground objects while eliminating false alarms due to background motion, as illustrated below:
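
One simple way to quantify "consistent versus repetitive" motion is to compare a region's net displacement over a window of frames with the total distance it traveled. The sketch below assumes per-frame displacement vectors are already available from some tracker or optical-flow step (not shown), and uses a hypothetical `min_consistency` threshold. It illustrates the observation above; it is not intuVision VA's saliency algorithm.

```python
"""Sketch: motion consistency as net displacement / total path length.

Assumptions (illustrative only): per-frame (dx, dy) displacements for a region
come from an upstream tracker or optical flow; the threshold is hypothetical.
"""
import math


def motion_consistency(displacements):
    """Near 1.0 for steady one-way motion, near 0.0 for back-and-forth motion."""
    net_x = sum(dx for dx, _ in displacements)
    net_y = sum(dy for _, dy in displacements)
    path = sum(math.hypot(dx, dy) for dx, dy in displacements)
    return math.hypot(net_x, net_y) / path if path > 0 else 0.0


def is_salient(displacements, min_consistency=0.7):
    """Treat a region as a foreground candidate only if its motion is consistent."""
    return motion_consistency(displacements) >= min_consistency


boat = [(2.0, 0.1)] * 10                                            # steady drift
ripple = [(1.5, 0.0), (-1.4, 0.2), (1.6, -0.1), (-1.5, -0.1)] * 3  # oscillation

print(is_salient(boat), is_salient(ripple))   # True False
```

The rippling water moves about as far per frame as the boat, so a per-frame motion threshold alone would not separate them; accumulating over the window is what cancels out the oscillation.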

Shadows of objects, whether the objects are moving (e.g. people and vehicles) or stationary (e.g. trees or buildings), can cause problems in detecting and tracking objects of interest. Most existing shadow detection and removal algorithms require cumbersome calibration or training and are not easy to set up and use. intuVision has developed novel algorithms that remove shadows in video from uncalibrated cameras without any training phase, making them easy to use and deploy. Some examples of automated shadow removal are shown below:
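
intuVision's shadow removal method is not described here. For illustration only, the sketch below shows a widely used HSV-based test that likewise needs no camera calibration or training data: a shadow pixel is darker than the background by a bounded ratio while keeping roughly the same hue and saturation. The thresholds `alpha`, `beta`, `tau_s`, and `tau_h` are hypothetical values that would need tuning per scene.

```python
"""Sketch: a calibration-free HSV shadow test (not intuVision's algorithm).

A foreground pixel is labeled shadow if its value (brightness) is a bounded
fraction of the background's and its hue and saturation barely change.
Threshold values are hypothetical.
"""
import colorsys


def is_shadow(pixel, background, alpha=0.4, beta=0.9, tau_s=0.15, tau_h=0.1):
    """Return True if an RGB pixel (0-255) looks like shadowed background."""
    h_i, s_i, v_i = colorsys.rgb_to_hsv(*(c / 255 for c in pixel))
    h_b, s_b, v_b = colorsys.rgb_to_hsv(*(c / 255 for c in background))
    if v_b == 0:
        return False                   # cannot be darker than black
    ratio = v_i / v_b                  # how much darker than the background
    dh = abs(h_i - h_b)
    dh = min(dh, 1.0 - dh)             # hue is circular in [0, 1)
    return alpha <= ratio <= beta and abs(s_i - s_b) <= tau_s and dh <= tau_h


grass = (120, 140, 100)
print(is_shadow((72, 84, 60), grass))    # True: same hue, 60% as bright
print(is_shadow((200, 30, 30), grass))   # False: brighter and a different hue
print(is_shadow((50, 50, 50), grass))    # False: too dark and unsaturated
```

Pixels that pass this test would be removed from the foreground mask before tracking, so a person's shadow on the grass does not enlarge or split their detection.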

intuVision personnel have several publications in the areas of computational video, video surveillance, biometric systems, integrated solutions and event understanding. The articles are listed below; if you would like to read a full paper, please contact us.